A fast and modern Python SOAP client

NOT ENOUGH SUDS IN WASHING MACHINE. For LG front load washers, a lack of visible soap suds is not a problem with the machine. Seeing little or no suds should be considered normal. This is due to the amount of water the machine uses. As a result, LG washers require that you use a HE detergent. The suds project is a python soap web services client lib. Suds leverages python meta programming to provide an intuative API for consuming web services. Runtime objectification of types defined in the WSDL is provided without class generation.

- Compatible with Python 3.6, 3.7, 3.8 and PyPy

- Build on top of lxml and requests

- Support for Soap 1.1, Soap 1.2 and HTTP bindings

- Support for WS-Addressing headers

- Support for WSSE (UserNameToken / x.509 signing)

- Support for asyncio via httpx

- Experimental support for XOP messages

A simple example:

Quick Introduction¶

Zeep inspects the WSDL document and generates the corresponding code to use theservices and types in the document. This provides an easy to use programmaticinterface to a SOAP server.

The emphasis is on SOAP 1.1 and SOAP 1.2, however Zeep also offers support forHTTP Get and Post bindings.

Parsing the XML documents is done by using the lxml library. This is the mostperformant and compliant Python XML library currently available. This resultsin major speed benefits when processing large SOAP responses.

The SOAP specifications are unfortunately really vague and leave a lot ofthings open for interpretation. Due to this there are a lot of WSDL documentsavailable which are invalid or SOAP servers which contain bugs. Zeep tries tobe as compatible as possible but there might be cases where you run intoproblems. Don’t hesitate to submit an issue in this case (but please firstread Reporting bugs).

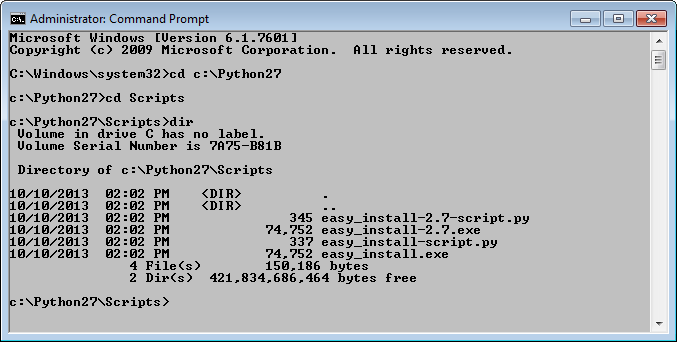

Installation¶

Zeep is a pure-python module. This means that there is no C code which needsto be compiled. However the lxml dependency does contain C code since it useslibxml2 and libxslt. For linux/bsd this means you need to install libxml2-devand libxslt-dev packages. For Windows this is unfortunately a bit morecomplicated. The easiest way is to install lxml via wheel files since thatcontains already compiled code for your platform.

To install wheel files you need a recent pip client. Seehttps://pip.pypa.io/en/stable/installing/ how to install pip on your platform.

If you have installed pip then run:

Note that the latest version to support Python 2.7, 3.3, 3.4 and 3.5 is Zeep 3.4,install via pip install zeep3.4.0

This assumes that there are wheel files available for the latest lxml release.If that is not the case (https://pypi.python.org/pypi/lxml/) then firstinstall lxml 4.2.5 since that release should have the wheel files for allplatforms:

When you want to use wsse.Signature() you will need to install the pythonxmlsec module. This can be done by installing the xmlsec extras:

For the asyncio support in Python 3.6+ the httpx module is required, thiscan be installed with the async extras:

Getting started¶

The first thing you generally want to do is inspect the wsdl file you need toimplement. This can be done with:

See python-mzeep--help for more information about this command.

Note

Zeep follows semver for versioning, however bugs can always occur.So as always pin the version of zeep you tested with(e.g. zeep4.0.0’).

A simple use-case¶

To give you an idea how zeep works a basic example.

The WSDL used above only defines one simple function (Method1) which ismade available by zeep via client.service.Method1. It takes two argumentsand returns a string. To get an overview of the services available on theendpoint you can run the following command in your terminal.

Note

Note that unlike suds, zeep doesn’t enable caching of the wsdl documentsby default. This means that everytime you initialize the client requestsare done to retrieve the wsdl contents.

User guide¶

- The Client object

- Settings

- Transports

- Datastructures

- WS-Security (WSSE)

- Plugins

- Reporting bugs

API Documentation¶

- Public API

- Internals

Changelog¶

- Changelog

This web page will no longer be updated. Seethe author's web pagesfor further releases.

RLCSA [4, 3, 7]is a compressed suffix array implementation that has been optimized for highlyrepetitive text collections. Examples of such collections include version control dataand individual genomes. This implementation also serves as a testbed for many techniquesused with compressed suffix arrays.The most up-to-date description of RLCSA can be found in [7].

The current version includes experimental support for:

- LCP information [5]

- Distribution-aware sampling [6, 9]

- Adaptive distribution-aware sampling

- Space-efficient document listing [8]

See README in the package for further information.The implementation is available for download under the MIT / X11 License.

There is also a separate package containing implementations of additional document retrieval algorithms:

- Brute-force document listing and top-k document retrieval.

- New variants of PDL for document listing and top-k document retrieval [10].

- Several variants of Sadakane's algorithm for document listing.

Full experimental results from [10] are also included in the document listing package.

News

- 2014-07-23 This web page will no longer be updated. See the author's web pages for further releases.

- 2014-04-18 An updated version of the document listing package, with a minor bug fix to RLCSA to support it.

- 2014-01-11 A new version of the document listing package, and some new datasets from [10].

- 2014-01-10 A new version with a number of technical changes. Required by January 2014 version of the doclist package.

- 2013-05-21 A separate package with some additional document listing algorithms.

- 2013-05-20 A new version with improved document listing support.

- 2013-04-10 A new version with document listing support.

- 2013-01-21 A new version with parallel sampling.

- 2012-12-07 A new version with various changes accumulated over time.

- 2012-11-05 More test data is now available online.

- 2012-10-12 More test data is now available online.

- 2012-02-14 More space-efficient construction. Option to use a succinct bit vector to mark sampled positions.

- 2011-08-23 A minor update to support the August 2011 version of GCSA.

- 2011-05-17 Support for distribution-aware sampling and an improved low-level interface for external modules.

- 2011-01-17 A new version that supports the interface used in recent GCSA experiments.

- 2010-11-25 Added a list of the data sets used in the experiments.

- 2010-10-13 A new version with libstdc++ parallel mode support and performance improvements.

- 2010-03-29 A new version with some compatibility and interface updates.

- 2010-01-11 A new version with experimental support for LCP information.

- 2009-11-25 A new version of RLCSA is available. This version includes bug fixes, more functionality and a bit cleaner interface.

- 2009-06-15 The implementation of RLCSA is now available.

Downloads

Current versions

- RLCSA (April 2014)

- Document listing (April 2014)

RLCSA

- April 2014. A minor bug fix version to support the April 2014 version of the document listing package.

- January 2014. This version includes a more consistent interface, faster SA construction for small alphabets, and some additions to the library. Required by January 2014 version of the doclist package.

- May 2013. This version contains a simplified construction program for a single file as well as improved document listing support. Most importantly, the grammar rules in precomputed document listing are no longer run-length encoded if that increases their size.

- April 2013. This version includes preliminary support for document listing using precomputed answers. A modified web graph compressor (Hernández, Navarro; SPIRE 2012) is required for building the document listing structure. The source code for it is available upon request. This version was used for the experiments in [8].

- January 2013. This version includes a multi-threaded sampling algorithm for finding (almost) optimal distribution-aware samples quickly. There is also some undocumented work on document listing with highly repetitive data. This version was used in the experiments in [9].

- December 2012. This version has many technical changes from the earlier versions. This version is required by the December 2012 version of GCSA.

- February 2012. Construction algorithm for partial indexes changed from multiple Larsson-Sadakane algorithms to a parallel prefix-doubling algorithm. Succinct bit vectors can be used instead of gap encoded ones to e.g. mark sampled positions. This version was used in the experiments in [7].

- August 2011. Minor updates to some library functions. This version is required by the August 2011 version of GCSA.

- May 2011. The low-level interface has been improved in this version. Weighted / distribution-aware sampling is now also supported. The test program has also been extended.

- January 2011. This version has a low-level interface compatible with that of GCSA. Also, the default sample rate was changed to more realistic 128, and the test program supports patterns in Pizza&Chili format.

- October 2010. This version supports libstdc++ parallel mode as an alternative to MCSTL. There are also some speed optimizations (an alternative encoding for run-length encoded bit vectors and improved display()).

- March 2010. Fixed some problems when compiling with a new version of g++. RLCSA now uses iterators and const operations to make parallelization easier.

- January 2010. Includes experimental support for LCP information. This version was used in the experiments reported in [5].

- November 2009. A cleaned up version with more functionality.

- June 2009. This version was used in the experiments reported in [3]. There are some bugs that were introduced when cleaning up the code. You should use the November 2009 version instead.

Document listing

- April 2014. A new variant of top-k PDL. Some technical updates and bug fixes. This package was used for the experiments in [10]. The package also includes full experimental results from [10]. Requires April 2014 version of RLCSA.

- January 2014. A major update for the experiments in [10]. Requires January 2014 version of RLCSA.

- May 2013. This is the initial release of the document listing package. The ILCP implementation is a rewrite of the one used in [8]. The package requires May 2013 version of RLCSA.

Suds Library Required Documents

Data

Suds Library Required Items

The following data sets were used in experiments with RLCSA. Some of them are available fromthe Pizza&Chili Corpus (Chile,Italy).

- DBLP archives and queries (available upon request) [6 (description), 9].

- DNA sequences (with synthetic mutations in some cases) [1 (description), 2, 4, 5, 7, 10].

- English language texts [5, 7, 10].

- Finnish language words [10].

- Human reference genome (NCBI build 34) [3].

- Influenza genomes from NIH [8, 10].

- Source code for OpenSSH version 4.7p1 and versions up to that [1, 2, 4].

- Protein sequences [3].

- Another set of protein sequences [10].

- Saccharomyces cerevisiae and Saccharomyces paradoxus genomes from the Wellcome Trust Sanger Institute [2 (description), 4, 7].

- Web queries (available upon request) and pages [6 (description), 9, 10].

- Wikipedia archives: fiwiki, enwiki [3 (description), 5, 7, 8 (description), 10].

The full test environment from [10] is also available upon request. Note that setting it up requires much manual work, and some of the implementations use 32-bit libraries.

References

Suds Library Required Books

- Jouni Sirén, Niko Välimäki, Veli Mäkinen, and Gonzalo Navarro: Run-Length Compressed Indexes Are Superior for Highly Repetitive Sequence Collections.

Proc. SPIRE 2008, Springer LNCS 5280, pp. 164-175, Melbourne, Australia, November 10-12, 2008. - Veli Mäkinen, Gonzalo Navarro, Jouni Sirén, and Niko Välimäki: Storage and Retrieval of Individual Genomes.

Proc. RECOMB 2009, Springer LNCS 5541, pp. 121-137, Tucson, Arizona, USA, May 18-21, 2009.[Article] [Preprint] - Jouni Sirén: Compressed Suffix Arrays for Massive Data.

Proc. SPIRE 2009, Springer LNCS 5721, pp. 63-74, Saariselkä, Finland, August 25-27, 2009. - Veli Mäkinen, Gonzalo Navarro, Jouni Sirén, and Niko Välimäki: Storage and Retrieval of Highly Repetitive Sequence Collections.

Journal of Computational Biology 17(3):281-308, 2010.[Article] [Preprint] Conference versions: [1, 2] - Jouni Sirén: Sampled Longest Common Prefix Array.

Proc. CPM 2010, Springer LNCS 6129, pp. 227-237, New York, USA, June 21-23, 2010. - Paolo Ferragina, Jouni Sirén, and Rossano Venturini: Distribution-Aware Compressed Full-Text Indexes.

Proc. ESA 2011, Springer LNCS 6942, pp. 760-771, Saarbrücken, Germany, September 5-7, 2011.[Article] [Preprint] - Jouni Sirén: Compressed Full-Text Indexes for Highly Repetitive Collections (PhD Thesis).

Department of Computer Science, Series of Publications A, Report A-2012-5, University of Helsinki, June 2012. - Travis Gagie, Kalle Karhu, Gonzalo Navarro, Simon J. Puglisi, and Jouni Sirén: Document Listing on Repetitive Collections.

Proc. CPM 2013, Springer LNCS 7922, pp. 107-119, Bad Herrenalb, Germany, June 17-19, 2013.[Article] - Paolo Ferragina, Jouni Sirén, and Rossano Venturini: Distribution-aware compressed full-text indexes.

Algorithmica 67(4):529-546, 2013. - Gonzalo Navarro, Simon J. Puglisi, and Jouni Sirén: Document Retrieval on Repetitive Collections.

Accepted to ESA 2014.[Preprint]